Chapter 01

That story about a hackathon at a hospital.

Once apon a time there was a “data for good” meetup that helped organize a hackathon at a large regional hospital where people got to play with an anonymized patient records. These were records from the psychiatric department and it helps to understand that this department represented an “emergency group”. Patients would come in that needed help right now and right away. To help paint a clear picture: some of these patients would appear late in the evening and would also stay the night at the ward for their safety. Others would merely need a quick conversation with a doctor and be out of the ward in mere minutes, but some of the patients were dealing with very very heavy stuff.

The hackathon revolved around a dataset which had about three years’ worth of diagnosis data from patients. It included arrival times, departure times, observed symptoms, diagnoses, nurse/doctor interactions, and sometimes even prescribed drugs. Everyone was free to toy around with the dataset, under the condition that all lessons would be shared and that none of the data would leave the building.

As the day went on, groups formed, charts got made, models got trained and many snacks got eaten while the staff members asked some of the programmers if they could check some of their hunches. Then, one of the senior data scientists attendees, shouted that they had found something ... amazing!

It seemed that ones could predict the diagnosis of the patient with 90% accuracy! They even got double-checked and confirmed this on a separate test set. This was a bold claim, because most of the other attendees had a lot of trouble getting a model to perform more than 65%.

This sparked a lot of excitement and the hackathon attendees started to huddle around the laptop to exchange algorithmic notes. But it also surprised one of the junior data scientists at the event. While this junior attendee had only recently started writing code, it was someone who took a course in neuropsychology in college. It was a course where you'd also meet real life patients, which certainly leaves you with an impression of what life at the psychiatric ward can be like.

This was useful knowledge and started a spark of doubt. This hackathon, after all, was held at a psychiatric department with full-time staff that combined several specialities. Proper diagnosis of a psychiatric disorder can take hours, but also days, so it feels strange that a few hours of basic modeling on a small data export on would be able to do it without major flaws. After all, the dataset is but a mere glimpse of the information that would be available to the nurses and doctors. So how could a model perform so well? Was the data wrong perhaps?

It turned out that there was a reason why the model "performed" so well: it had a leak.

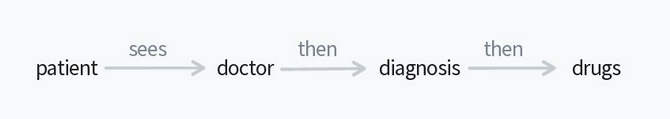

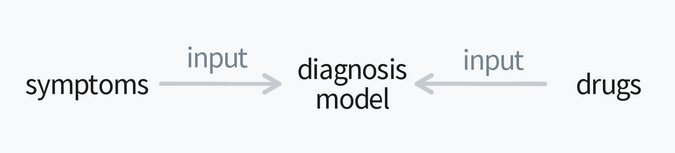

To understand this "leak", it helps to consider the order in which events happen in real life. In this hospital, a doctor would diagnose the patient, after which a drug would be prescribed. If you're going to make a model that tries to predict the diagnosis then in your model should only be allowed to see the data that is available at the time the patient walks in, not when they leave. But that's not what the consultant had implemented.

The junior data scientist was just about to confront the high accuracy numbers when, thankfully, another realization took place. A 90% accuracy sounds like very high accuracy, but apon reflection, maybe the number was too small?

Think about it ... if you know the symptoms and the prescribed drugs to predict the diagnosis ... then you know both the past and the future of the patient in order to predict the present ... so why can't the model achieve 100% accuracy? Under what circumstances would having the drug prescription and the symptoms not give you the information to make the prediction perfect?

It doesn't happen often, but it turned out that the data leak ... had a leak in itself.

After doing some more digging in the data and after talking to some of the staff, the junior data scientist found evidence that doctors don’t always prescribe drugs consistently. Certain drugs mainly got prescribed on Tuesdays and Thursdays, in a way that stood out once you knew what to look for. After checking in with the nurses they were able to confirm that this was certainly worth reviewing internally.

Appendix

This was a story of a hackathon at a hospital. But it’s also the story of a poorly designed classifier that was a blessing in disguise. If it weren't for the poorly designed classifier, one might have never investigated the leaks. So maybe the main lesson here is that doubt is a good thing.

If something in the predictive process sounds fishy, go deep and question it. Got a theory of what went wrong? Don't stop applying doubt until you've gotten a clear picture of the situation.