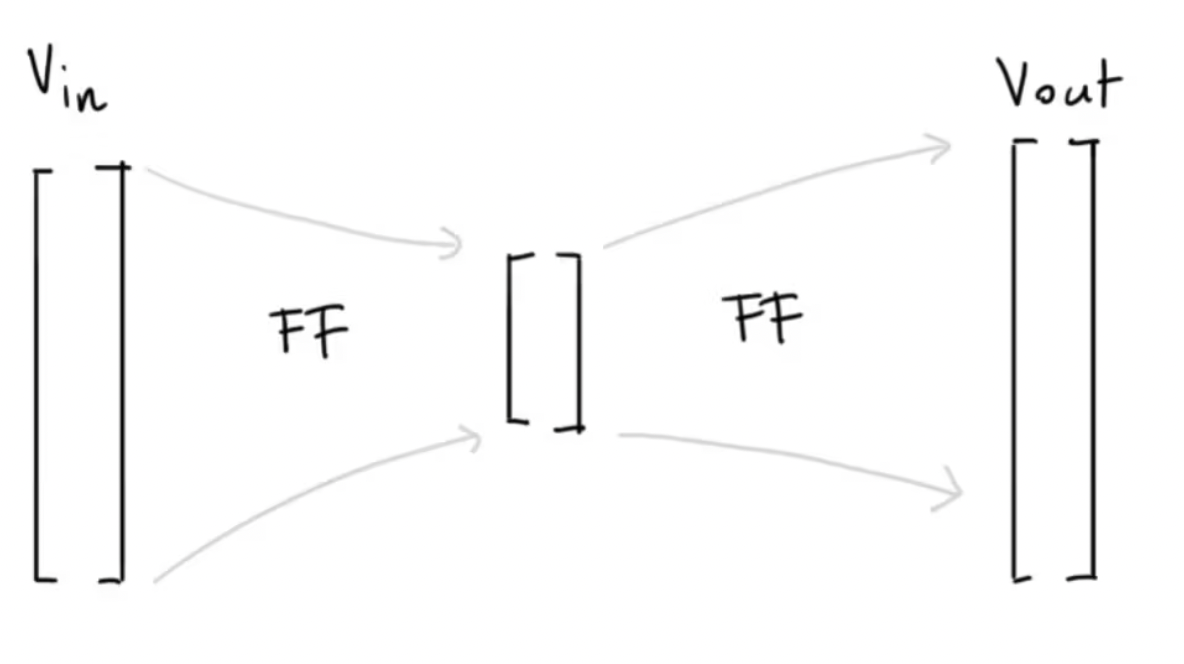

So far we've been discussing neural systems that train by predicting the next token or the surrounding tokens. Those systems had a diagram that looked like this:

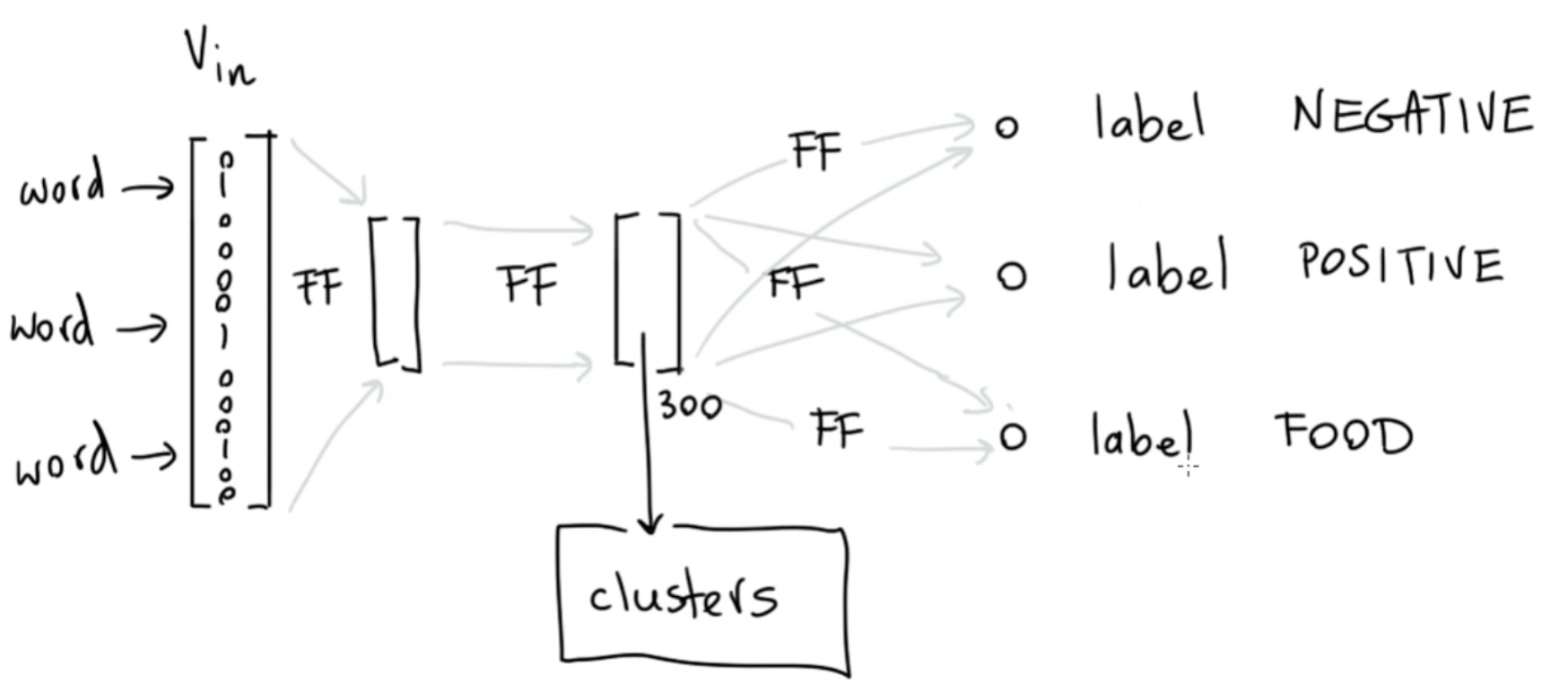

But we're free to pick whatever diagram we like. We can also learn embeddings by training on a few general NLP tasks. As long as we can calculate a gradient, we can update the system!

In this diagram, we assume the input is a sentence and that there are three classification labels we'd like to predict. This system also has a layer in the middle that you can re-use as an embedding.
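To make the idea concrete, here is a minimal sketch of such a multi-task setup in plain Python. Everything in it is an assumption made for illustration: the toy sentences, the three made-up tasks, the choice to build the shared layer as the mean of token vectors, and the names `embed` and `train_step`. A real system would likely use a deep learning library and a richer encoder.

```python
import math
import random

random.seed(0)

# Hypothetical toy data: (sentence, (label for task A, task B, task C)).
DATA = [
    ("i love this movie", (1, 0, 0)),
    ("i hate this movie", (0, 0, 0)),
    ("do you love pizza", (1, 1, 1)),
    ("do you hate pizza", (0, 1, 1)),
]
NUM_CLASSES = (2, 2, 2)  # number of classes for each of the three tasks
DIM = 8                  # size of the shared (re-usable) layer

vocab = sorted({tok for text, _ in DATA for tok in text.split()})
index = {tok: i for i, tok in enumerate(vocab)}

def rand_matrix(rows, cols):
    return [[random.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

E = rand_matrix(len(vocab), DIM)                    # shared token vectors
heads = [rand_matrix(DIM, c) for c in NUM_CLASSES]  # one classifier per task

def embed(text):
    """Shared middle layer: the mean of the sentence's token vectors."""
    ids = [index[tok] for tok in text.split()]
    return [sum(E[i][d] for i in ids) / len(ids) for d in range(DIM)], ids

def softmax(logits):
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def train_step(text, labels, lr=0.5):
    """One SGD step on the sum of the three cross-entropy losses."""
    h, ids = embed(text)
    loss, grad_h = 0.0, [0.0] * DIM
    for W, y in zip(heads, labels):
        n_out = len(W[0])
        logits = [sum(h[d] * W[d][c] for d in range(DIM)) for c in range(n_out)]
        probs = softmax(logits)
        loss -= math.log(probs[y])
        # Gradient of cross-entropy w.r.t. the logits: probs - one_hot(y).
        delta = [p - (1.0 if c == y else 0.0) for c, p in enumerate(probs)]
        for d in range(DIM):
            # Accumulate the gradient for the shared layer before updating W.
            grad_h[d] += sum(W[d][c] * delta[c] for c in range(n_out))
            for c in range(n_out):
                W[d][c] -= lr * h[d] * delta[c]
    # Every task's gradient flows back into the same shared token vectors.
    for i in ids:
        for d in range(DIM):
            E[i][d] -= lr * grad_h[d] / len(ids)
    return loss

first_loss = sum(train_step(text, labels) for text, labels in DATA)
for _ in range(200):
    last_loss = sum(train_step(text, labels) for text, labels in DATA)

# After training, the shared layer can be re-used as a sentence embedding.
sentence_vector, _ = embed("i love pizza")
```

The three heads never see each other, but they all push gradients into the same `E`, so the vector returned by `embed` ends up carrying information that is useful for all three tasks at once.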