Remember that embedding chart from the previous segment?

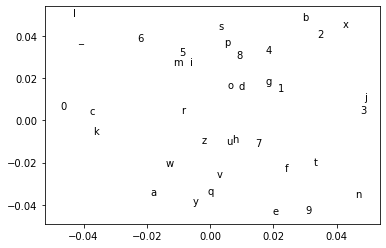

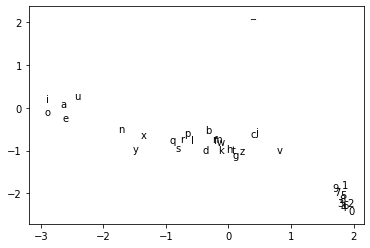

This is what the embedded space looks like before training. After training is done, it looks like this:

Notice how the vowels are all next to eachother? Just like the numbers?

General pattern

Let's go over the steps that happened here.

- We started with a dataset and a predictive task.

- By training for this task, we get a numeric representation as a side-effect.

- When we look at the numeric representation, it seems that clusters appear. These clusters may be useful for other tasks.

These clusters appear as a side effect of the training procedure, and it's a general "theme" when training any embeddings.